Though we may not realise the full extent of its influence, artificial intelligence (AI) has been playing a significant role in all of our lives for many years. While we may not yet be guided by the dulcet tones of a HAL computer voice à la 2001: A Space Odyssey, it is now estimated that over half of UK homes have some form of virtual assistant, such as Alexa, Siri, Cortana or Google Assistant (figure 1). These innovations are still considered a novelty by many, but we have all relied on some form of artificial intelligence in many household appliances and in our cars for years: controlling temperatures, monitoring energy usage, helping to search the internet, and recommending partners.

While all of this is likely to be viewed positively, there is some concern about the long-term implications for jobs. Where previously a human might have been employed to recommend a holiday, offer a service or make a medical diagnosis, this role might now be filled by an electronic device.

The Office for National Statistics (ONS) analysed the jobs of 20 million people in England in 2017 and found that 7.4% were at ‘high risk of automation.’1 The three occupations with the lowest risk of automation were medical practitioners, higher education teaching professionals, and senior professionals of educational establishments. These occupations are all considered highly skilled. Among the health professions, vets seemed to be most vulnerable, while eye care practitioners were less so; ‘ophthalmic opticians’ were calculated as having a reasonably low risk of replacement of 0.266, with dispensing opticians considered more vulnerable, with a risk value of 0.453.

But these sorts of calculations ignore some of the many benefits offered by automation in healthcare. For many years, it was assumed, with good reason, that accurate diagnosis by a clinician is more likely with experience. Because no two patients are alike, the more exposure one has to the range of presentations of any specific disease, the more likely the correct diagnosis is to be made. If one had access to a massive database of signs and symptoms that could be analysed in seconds, it should be possible to compare the signs and symptoms of one individual with this large database and arrive at the best diagnostic conclusion.

So, a more positive view of the rise of automation might be to embrace the improved analysis of a much greater amount of data, which may guide a clinician to a more accurate conclusion and, importantly, free up more time for a useful interaction with the patient to ensure that the best possible outcome is achieved. Indeed, recent studies have tended to show that eye care professionals are optimistic about the use of AI in their professional work. For example, one study surveyed over 400 optometrists (in the US).2,3 The key findings were:

- Around half of optometrists (53%) surveyed had some concerns about the diagnostic accuracy of AI

- The majority (72%) agreed that AI would, overall, be of benefit to eye care practice

- An overwhelming majority (83%) felt that AI needs to be incorporated into the education curricula of eye care teaching institutions

- 65% believed AI should play a greater role in clinical practice; this support seems to have been boosted during the period of lockdown due to the Covid-19 pandemic

Definitions and Terminology

A number of terms recur in any discussion of AI and deserve some clarification.

Artificial intelligence (AI)

AI might usefully be defined as the simulation of human intelligence processes by machines, especially computer systems. These processes include learning (the acquisition of information and rules for using the information), reasoning (using rules to reach approximate or definite conclusions) and self-correction.4 Whether true AI has yet been achieved is a debatable point. The great mathematician Alan Turing proposed the Turing test, originally called the imitation game, in 1950.5 It holds that the true test of a machine’s ability to exhibit intelligent behaviour is whether that behaviour is equivalent to, or indistinguishable from, that of a human. Turing proposed that a human evaluator would judge natural language conversations between a human and a machine designed to generate human-like responses. Whether any AI system has yet achieved this is a topic for much discussion.

Machine learning

Machine learning is an application of AI that provides systems with the ability to automatically learn and improve from experience without being explicitly programmed. Machine learning focuses on the development of computer programs that can access data and use it to learn for themselves. A good example of machine learning is the use of cumulative normative databases to suggest a diagnosis or to highlight any data that falls outside a set considered to be normal.

Algorithm

A step-by-step mathematical method of solving a problem. It is commonly used for data processing, calculation and other related computer and mathematical operations. Algorithms are invariably at the heart of AI. Most electronic equipment used in eye care will have some form of algorithmic processing integral to its operation.

Neural network

A series of algorithms that endeavours to recognise underlying relationships in a set of data through a process that mimics the way the human brain operates. A deep neural network is one with a certain level of complexity; in other words, a neural network with more than two layers. Deep neural networks use sophisticated mathematical modelling to process data in complex ways.
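To make the idea of layers concrete, the following is a minimal sketch of data passing through a small network with two hidden layers. The layer sizes, the random weights and the four input values are all assumptions chosen for illustration; the network is untrained, so its output carries no clinical meaning.

```python
import math
import random

def relu(values):
    """Simple activation: negative values become zero."""
    return [max(0.0, v) for v in values]

def sigmoid(x):
    """Squash a number into the range 0 to 1."""
    return 1.0 / (1.0 + math.exp(-x))

def dense(inputs, weights, biases):
    """One fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def random_layer(n_in, n_out, rng):
    """Create a layer with random weights (a trained network would learn these from data)."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def forward(features, layers):
    """Pass the input through every hidden layer, then through a single output unit."""
    activations = features
    for weights, biases in layers[:-1]:
        activations = relu(dense(activations, weights, biases))
    weights, biases = layers[-1]
    return sigmoid(dense(activations, weights, biases)[0])

rng = random.Random(0)
layer_sizes = [4, 8, 8, 1]  # assumed: 4 inputs, two hidden layers of 8 units, 1 output
layers = [random_layer(a, b, rng) for a, b in zip(layer_sizes, layer_sizes[1:])]

score = forward([0.2, 0.5, 0.1, 0.9], layers)  # hypothetical, already-scaled measurements
print(f"Untrained network output: {score:.3f}")
```

Training would consist of adjusting the weights until the output matches known examples; that step is omitted here.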

Current Use

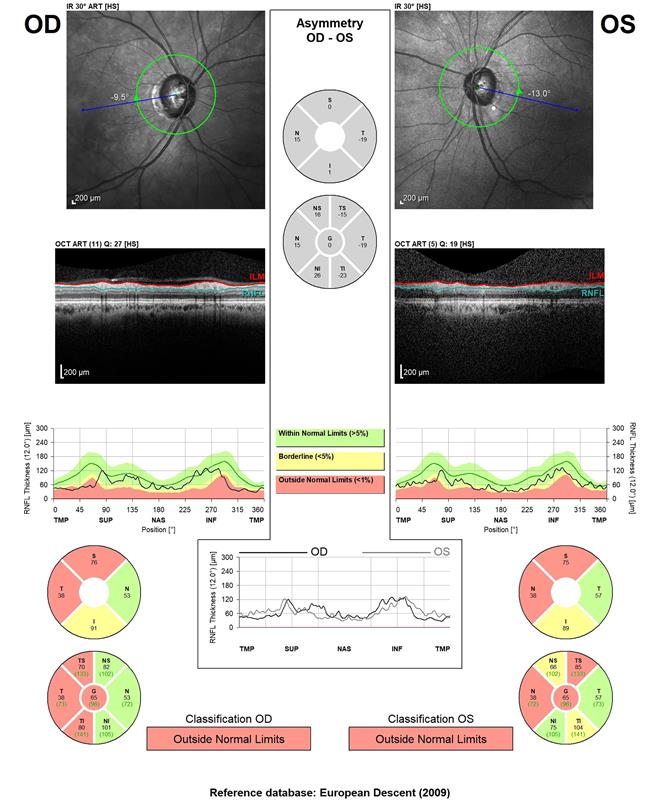

ECPs will use some form of AI and machine learning every day. Instruments that compare measured data with a stored normative database, and so indicate where a patient falls outside predicted normal limits, are examples. OCTs often display data this way. For example, when showing the thickness of the retinal nerve fibre layer (RNFL), a graph is displayed that shows the average normal thickness and then the thicknesses measured for the patient (figure 3). Colour coding is also commonly used to indicate where values fall outside the normal range (red) or are borderline (yellow).

Figure 3: The RNFL display of an OCT showing colour coding to represent variation from a normative database

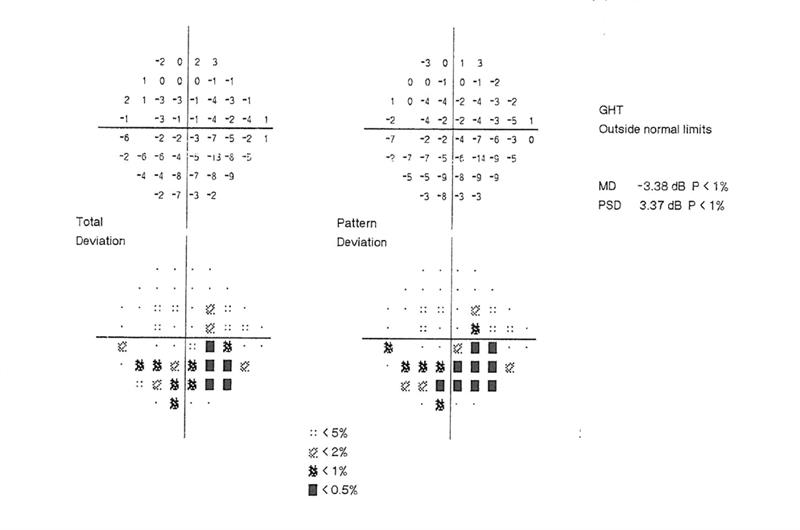
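The colour coding described above can be sketched as a comparison of one measurement against a normative distribution. This is an illustration only: the mean, standard deviation and the 1% and 5% cut-offs are assumed values, and the assumption of a normal (Gaussian) distribution is a simplification. Real instruments use their own age-matched databases and thresholds.

```python
from statistics import NormalDist

# Hypothetical normative values, for illustration only (not from any real instrument)
NORM_MEAN_UM = 100.0  # assumed mean RNFL thickness in micrometres
NORM_SD_UM = 10.0     # assumed standard deviation in micrometres

def classify_thickness(thickness_um, mean=NORM_MEAN_UM, sd=NORM_SD_UM):
    """Return a colour code based on where a measurement sits in the normative distribution."""
    percentile = NormalDist(mean, sd).cdf(thickness_um) * 100
    if percentile < 1:   # assumed cut-off: thinner than 99% of the normative group
        return "red"
    if percentile < 5:   # assumed cut-off: thinner than 95% of the normative group
        return "yellow"
    return "green"

for thickness in (100, 82, 70):
    print(thickness, classify_thickness(thickness))
```

With these assumed values, a thickness of 100 microns is coded green, 82 yellow and 70 red.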

Another example of machine learning being used to indicate what is normal is found in most automated visual field analysers, such as the Humphrey. Data outputs may show a ‘total deviation’ plot, where retinal sensitivity is compared with that of a large database of age-matched norms, and a ‘pattern deviation’ plot, depicting where fluctuations within the same field are outside expected values, along with indications of the probability of any variation being down to chance (figure 4).

Figure 4: Section of a Humphrey VFA printout
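The two comparisons described above, against age-matched norms and within the patient's own field, can be sketched as follows. The sensitivity values are made up, and the way the overall level of the field is estimated here (taking the second-best point) is an assumption for the purposes of the sketch, not a description of how any commercial analyser performs the calculation.

```python
def deviation_from_norm(measured_db, age_norm_db):
    """Point-by-point difference between measured sensitivity and the age-matched norm (dB)."""
    return [m - n for m, n in zip(measured_db, age_norm_db)]

def deviation_within_field(deviations, rank=2):
    """Remove the overall level of the field so that only localised loss remains.

    The overall level is estimated as the rank-th best deviation (an assumed rule).
    """
    general_height = sorted(deviations, reverse=True)[rank - 1]
    return [d - general_height for d in deviations]

# Made-up sensitivities (dB) at six test locations
measured = [26, 25, 27, 18, 24, 26]
age_norm = [30, 29, 31, 30, 29, 30]

total = deviation_from_norm(measured, age_norm)
pattern = deviation_within_field(total)

print("Deviation from age-matched norm:", total)    # [-4, -4, -4, -12, -5, -4]
print("Deviation within the same field:", pattern)  # [0, 0, 0, -8, -1, 0]
```

In this example the whole field is depressed by about 4dB, which might reflect something like media opacity, but only the fourth location stands out once that general depression is removed.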

Machine learning and algorithmic data analysis may also support clinical decision-making. Assessing whether a patient has, or is likely to develop, chronic open angle glaucoma (COAG) must take into account a great many risk factors, clinical measurements and signs. For this reason, protocols are in place to minimise the ever-present risk of false positives and negatives in the referral process from primary to secondary care. Never has this been more important than in these cash-strapped times.

In one study from West Kent,6 a Bayes algorithm was applied to a set battery of clinical outcomes (such as pachymetry, threshold fields, disc analysis and contact tonometry; figure 5) to see how referral decisions based on this data analysis might compare with decisions made by clinicians alone.

Figure 5: Statistical analysis of clinical data to decide on referral compares favourably with decisions taken by clinicians

The results from this study were eye-catching. Outcomes of cross-validation, expressed as (mean, standard deviation), showed that the accuracy of the Bayes algorithm was high (95%, 2.0%) but that it falsely discharged (3.4%, 1.6%) or falsely referred (3.1%, 1.5%) some cases. The authors concluded that Bayes algorithmic analysis compared favourably with the decisions of specialist optometrists.
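As a rough illustration of how such figures arise, the sketch below trains a simple Gaussian naive Bayes classifier on synthetic data and reports cross-validated accuracy, false discharge and false referral rates as means and standard deviations. It is not the method or data of the West Kent study: the two features, their distributions, the five folds and the choice of a naive Bayes model are all assumptions made for the example.

```python
import math
import random
from statistics import mean, stdev

def make_synthetic_cases(n, rng):
    """Synthetic (features, label) pairs; label 1 = refer, 0 = discharge. Not real patient data."""
    cases = []
    for _ in range(n):
        refer = rng.random() < 0.5
        iop = rng.gauss(25 if refer else 17, 3)      # assumed intraocular pressure, mmHg
        cdr = rng.gauss(0.7 if refer else 0.4, 0.1)  # assumed cup-to-disc ratio
        cases.append(([iop, cdr], int(refer)))
    return cases

def fit(train):
    """Estimate the prior and per-feature mean and variance for each class."""
    model = {}
    for label in (0, 1):
        rows = [x for x, y in train if y == label]
        stats = [(mean(col), max(stdev(col) ** 2, 1e-9)) for col in zip(*rows)]
        model[label] = (len(rows) / len(train), stats)
    return model

def log_gaussian(x, mu, var):
    """Log of the normal probability density."""
    return -0.5 * math.log(2 * math.pi * var) - (x - mu) ** 2 / (2 * var)

def predict(model, features):
    """Choose the class with the highest log posterior, treating features as independent."""
    def log_posterior(label):
        prior, stats = model[label]
        return math.log(prior) + sum(
            log_gaussian(x, mu, var) for x, (mu, var) in zip(features, stats))
    return max(model, key=log_posterior)

def cross_validate(cases, k=5):
    """Hold out each of k folds in turn; return (accuracy, false discharge, false referral) per fold."""
    folds = [cases[i::k] for i in range(k)]
    results = []
    for i, test in enumerate(folds):
        train = [c for j, fold in enumerate(folds) if j != i for c in fold]
        model = fit(train)
        preds = [(predict(model, x), y) for x, y in test]
        n = len(test)
        accuracy = sum(p == y for p, y in preds) / n
        false_discharge = sum(p == 0 and y == 1 for p, y in preds) / n
        false_referral = sum(p == 1 and y == 0 for p, y in preds) / n
        results.append((accuracy, false_discharge, false_referral))
    return results

rng = random.Random(42)
results = cross_validate(make_synthetic_cases(200, rng))
for name, column in zip(("accuracy", "false discharge", "false referral"), zip(*results)):
    print(f"{name}: mean {mean(column):.1%}, SD {stdev(column):.1%}")
```

Each rate is expressed here as a fraction of all cases in the held-out fold, so the three figures for a fold sum to one. The study may have defined its rates differently.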

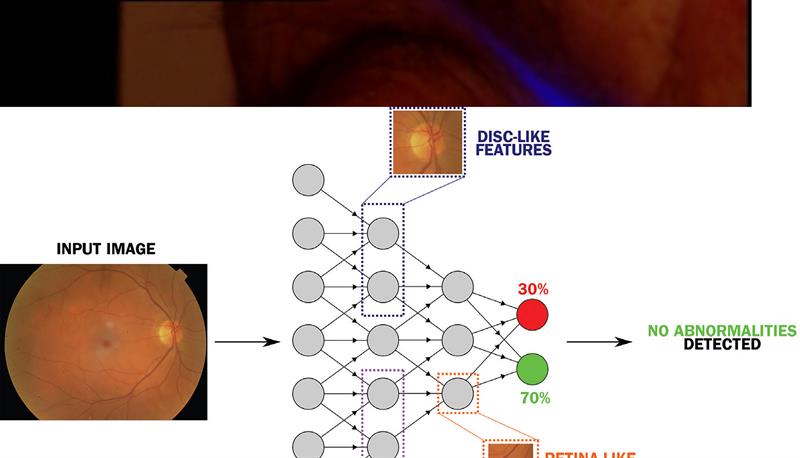

There have also been significant strides forward in the use of neural networks to enhance the interpretation of clinical images (figure 6). For example, systems such as Pegasus (from AI company Visulytix) have been developed to detect abnormal appearances in retinal images or in disc profile. Studies again suggest that they compare favourably with detection rates from clinicians alone.7

Figure 6: Schematic representation of an artificial neural network designed to identify anomalies in eye health

There are also many benefits for patients. By using data-gathering apps at home, they can provide the clinician with much more data over time to interpret, at a time when access to clinics is restricted. Patients can also benefit from alarms indicating the need for intervention, reminders for check-ups and simple diagnostic indicators that nudge changes in lifestyle. Typical examples of such systems are to be found on most modern smartphones and watches.

Advantages and Disadvantages

A number of disadvantages of machine learning in healthcare have been identified:4

- Training and testing on data that is biased or not clinically meaningful: the data analysis is only as relevant as the data gathered in the first place

- Lack of independent blinded evaluation on real-world data

- Narrow applications that might not be easily generalised to clinical use

- Inconsistent means of measuring performance of algorithms

- Commercial developers’ hype may be based on unpublished, untested and unverifiable results

The many advantages, including better use of resources, have already been noted. AI in healthcare is, however, a new and rapidly growing area and, to quote the Academy of Medical Royal Colleges: ‘Artificial intelligence should be used to reduce, not increase, health inequality – geographically, economically and socially.’

Books

Usually, the best references for developments in AI are to be found online, something that reflects the rapidity of the evolution of AI in healthcare. I have, however, come across a couple of textbooks worthy of a look.

For the patient

The Future You is written by Harry Glorikian, a journalist with a background in AI and healthcare. If you can stomach some of the more extreme Americanisms within, the book offers a good overview of some of the key areas of healthcare where AI has had a positive impact and what we might expect in the near future. Importantly, it does not patronise the reader, even though it assumes only a limited knowledge of technology.

For the clinician

Dr Anthony Chang’s comprehensive text, Intelligence-Based Medicine: Data Science, Artificial Intelligence, and Human Cognition in Clinical Medicine and Healthcare, provides an excellent and up-to-date overview of artificial intelligence concepts and methodology, and includes multiple examples of real-life applications in healthcare and medicine. Well worth a read.

Final Point

Before we all run to the hills, having been replaced by a Skynet of automated healthcare number crunchers, remember one point. There is a good reason why the healthcare professions are considered by the ONS to be at lower risk of automation. Achieving an accurate diagnosis based on data, or even developing an appropriate management plan based upon the diagnosis, does not mean that this result is easily conveyed to a patient in a way that is fully understood, appropriately implemented or followed. As long as patient compliance is a challenge, I suggest that the communication skills of a human clinician will always be required.

References

- Office for National Statistics. Which occupations are at highest risk of being automated? https://www.ons.gov.uk/employmentandlabourmarket/p... andemployeetypes/articles/whichoccupationsareathighestriskofbeingautomated/2019-03-25

- Shorter E et al. Evaluation of optometrist’s perspectives of artificial intelligence in eye care. Investigative Ophthalmology & Visual Science, 2021;62(8):1727

- Scanzera AC et al. Optometrist’s perspectives of artificial intelligence in eye care. Journal of Optometry, 2022;15(Suppl 1):S91-S97

- Academy of Medical Royal Colleges. Artificial Intelligence in healthcare. Downloadable from: https://www.aomrc.org.uk/reports-guidance/artifici...

- Turing A. Computing machinery and intelligence. Mind, 1950;59(236):433-460

- Gurney JC et al. Application of Bayes’ to the prediction of referral decisions made by specialist optometrists in relation to chronic open angle glaucoma. Eye, https://doi.org/10.1038/s41433-018-0023-5

- Trikha S et al. Deep learning takes to the air. Optician, 04.08.2017, pp 22-23