Problem framing

‘If I were given an hour in which to do a problem upon which my life depended, I would spend 40 minutes studying it, 15 minutes reviewing it and 5 minutes solving it.’ – Albert Einstein

Problem framing emphasises focusing on the definition of the problem, and it is the MOST important step because how a problem is defined shapes the approaches taken towards its solution. The truth is that you will seek what you set yourself up to seek and find what you set yourself up to find. It follows that good solutions depend on correct definitions of the problem, which in turn depend on a deep understanding.

Making an effort to overcome any obstacles to fully understanding the problem and its implications is time well spent. Typically, the most obvious solutions readily come to mind, and our minds work in reverse to construct the associated question and then tell us that we have done a good job. This is a fool’s trap, because a perfect answer to the wrong question is always the wrong answer.

Here are some tips to help realise the salient question:

Focus on questions and not diagnoses

Every patient presentation is to some degree unique, not only in clinical features but also with regard to the individual person affected. In framing a problem, context is everything and understanding this always trumps a diagnostic label. Our ability to make a good decision is predicated on being able to define the question whose answer will tip our behaviour. Errors in framing will lead to errors in action.

For example, when a female patient presents with cataract, the key question is not usually ‘does she have a cataract?’ or even the more nuanced ‘what is the degree of cataract?’; more typically, it would be something like ‘would removal of the cataract enable her to continue driving?’ or ‘is there any more serious eye disease that is co-existing with the cataract?’.

Another example might be that of a hyperopic child who is noted to have what might be a raised optic nerve head. In this situation, the focus should not be on deciding the most probable cause, but on excluding the less likely, but far more worrisome, possibility of papilloedema. In these cases, our actions are best determined by our confidence in deciding what it is not, rather than what it likely is.

Concision

Concision in framing will often direct us towards the right solution. When we cannot keep it simple, we probably have not reached the root of the problem. When puzzled by a complex problem with many strands, it is useful to imagine explaining it to a layperson without using any jargon: first as it comes to us, as a messy stream of consciousness, then again with structure, then again in an action-orientated single sentence, and finally in no more than a handful of words.

Clinicians need to parse problems and convert them from descriptions into directed actions.

Research and collect information

We cannot address what has been overlooked, and when our understanding is superficial it is not possible to be sure that everything relevant is being taken into account. A complete understanding requires us both to explore the problem as it is presented and to recognise its context; it needs us to go deeper and outwards. Clinicians ought not to limit themselves to managing eye problems, but should broaden their perspective to manage individuals with a whole range of problems, one of which happens to affect their eyes.

Trying to solve a problem without grasping the fundamentals of the specific situation is like trying to write a novel without knowing the alphabet or the rules of grammar, or the mechanisms of publishing and marketing. When researching problems, it is necessary to both zoom in to view the detail and zoom out to appreciate the bigger picture.

Challenge assumptions

We all have an overly simplistic mental picture of the world that acts as a template of expectation that influences our perception. Observations that match our internal template are casually accepted as fact, whereas those that do not are readily dismissed. When framing a problem, we need to ask ourselves what we really know to be facts and what we are assuming.

For example, when tonometry indicates a high IOP, it should be remembered that the number is an estimate, whatever method is used. The true value would only be obtained by laying the patient down, puncturing the cornea with a capillary tube that has been partially filled with mercury and measuring the height to which the column is raised. The assumptions habitually made in tonometry are based more on convenience than on physics, and while some can be refined (such as by considering central corneal thickness), others cannot (such as corneal rigidity).

Of course, some assumptions are necessary for practicality, but they are nonetheless assumptions, and in some circumstances their validity will be strained. For example, a cornea thickened by stromal oedema may have an unusually low mechanical resistance to deformation, because the increased thickness is due to an influx of water rather than more cross-linked collagen. The converse may occur in a cornea that has been thinned by disease but where the thinning has been accompanied by stromal scarring.

Manage yourself as a priority

We have more control over our own thoughts and circumstances than over other people and their situations, and so efforts made to manage ourselves are usually more effective at producing change. Also, as with many things in life, it is a more efficient strategy to address current behaviours that we know to be harmful before adopting good behaviours in the hope that they will make up for deficiencies. As with a good diet, it is more important to stop eating badly before starting to load up on superfoods.

Keep up-to-date with knowledge

It is a given for all decision makers operating in a specialist area that they need to have comprehensive knowledge within their speciality. It is inexcusable for us to act otherwise, especially when people are trusting us with the wellbeing of their vision and eyes. We all need to know our stuff relevant to our scope of practice, but we also need to be honest, and to admit to and address large gaps in our knowledge. The safety of a clinician depends more on what they know least than on what they know best, in much the same way that a chain is only as strong as its weakest link.

What we knew in the past is less important than what we know today. Knowledge decays. Not only do we forget things, but ‘facts’ change all the time: the Earth was flat, smoking was once doctor-recommended, and Pluto was a planet. When I qualified as an optometrist, the treatment of normal tension glaucoma was contentious. There was no treatment for wet AMD, and the cornea only had five layers.

It is helpful to consider the ‘Knowledge Half-Life’ concept, which refers to the time it takes for half of the knowledge or facts in a particular area to become obsolete or be superseded by new facts. We cannot rest on our laurels and depend upon previous achievements and qualifications. Someone who gained a PhD in contact lens practice 20 years ago may know absolutely zero about modern silicone hydrogel daily disposables.
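The half-life idea can be expressed as simple exponential decay: after t years, the fraction of knowledge still current is 0.5 raised to the power t/T, where T is the half-life. This is a sketch that assumes a constant rate of decay, which is a simplification; the function name and the 10-year half-life used below are hypothetical values chosen purely for illustration, not measured figures for optometry.

```python
def fraction_still_current(years_elapsed: float, half_life_years: float) -> float:
    """Fraction of knowledge gained `years_elapsed` ago that is still current,
    assuming (hypothetically) simple exponential decay with a fixed half-life."""
    if half_life_years <= 0:
        raise ValueError("half_life_years must be positive")
    return 0.5 ** (years_elapsed / half_life_years)


# Hypothetical: a 10-year half-life and a qualification gained 20 years ago.
print(fraction_still_current(20, 10))  # 0.25
```

Under these assumed numbers, two half-lives have passed, so only a quarter of what was learned would still be current.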

The good news is that investing in yourself is guaranteed to produce a profitable return that builds over time.

Remove barriers to being smart

The human brain is the most complex of structures. Its formidable repertoire includes controlling our bodies, looking after the essentials of energy generation, reproduction and overall survival, storing information for over a century if needed, communicating in languages of staggering complexity and, uniquely, having awareness of its own functioning and existence. And yet, time and time again, we cannot escape the daily reminders that we so often do things that are incredibly stupid.

We are not machines. However, like machines, our brains have limits to what they can and cannot do. Our thinking is like a juggler keeping balls in the air. If more balls are introduced, rather than recognising their limit and refusing to accept more, the juggler will carry on to the point of catastrophic failure. As with a computer running with too many tabs open, our brains are liable to freeze and crash. When we force ourselves to do too much, too fast, for too long, we drop everything and become dumb and possibly dangerous.

The trick to avoiding this is to admit that considered thinking is a finite resource. We should give some consideration to what we think about and take active steps to shape our working environments to limit our exposure to unnecessary and unsustainable mental effort. Nobody is capable of working close to their operational limit all the time. If we try, or are forced, to do so, we will fail and end up worse than stupid – worse, because it is our fault or we have been complicit. The only variables in this scenario are the duration and extent of the disaster. Do not set yourself up to fail.

We all know that unrealistic time pressures, multi-tasking and interruptions consume our mental resources, leaving us less able to work to our full potential and to respond to unexpected stresses. In fact, it is much easier and more effective to remove barriers to being smart than to actually be smart.

If you want to be better at making clinical decisions, you need to take responsibility for setting up your working environment to avoid unrealistic time pressures, multi-tasking and unnecessary interruptions. How tough this can be must not be underestimated, because it usually involves negotiating between conflicting priorities and different objectives within power hierarchies. It is often bemoaned that this responsibility falls unfairly upon individual clinicians; this is a valid grievance, but the responsibility nonetheless has to be accepted as part of being a clinician.

Creating a working environment that is conducive to thinking to our full potential is not simply about longer one-on-one testing times with patients. For example, it may be feasible to negotiate safety breaks, as have been accepted as necessary in some occupations such as air traffic control and professional driving. When possible, clinicians should defer some decisions to suitably trained colleagues, and when this is not possible, make every effort to make it so.

Unscheduled interruptions must be limited, with non-essential consultations being made after a clinic or at specified times set aside within a clinic. There may also be scope to organise clinics to reduce the randomness of their case-mix, so that the minds of clinicians do not have to rapidly flip-flop between different modes of working (for example, children, contact lens aftercares, dilations and so on), or certain activities may be aggregated (such as ordering, verification, collections, staff performance reviews and so on). Of course, with regard to our working environments, the ideal is never possible, but that is not a valid reason for doing nothing, and it is always the case that at least something can be improved.

Actively seek feedback

The most reliable way that we learn and develop our patterns of behaviour is through feedback, because its personal relevance makes it resonate more deeply than any textbook or lecture ever will. It follows that clinicians should actively seek feedback (what the real-world outcomes of a particular action were) rather than satisfying themselves that they followed a guideline, or assuming that the absence of a complaint is equivalent to an endorsement.

We should not rely on guidelines, because they have limits in their applicability and, through the necessity of brevity, are always at least partly incomplete. Nor can we rely on complaints, because most suboptimal outcomes remain hidden as they never reach the threshold needed for a complaint to be raised.

Actively seeking feedback is more than just being receptive to feedback when it is presented; it should involve going out of our way to find out what happened. In practice, this means taking the time to chase referrals and, most usefully, routinely contacting patients to ask them how things turned out. This can easily be achieved with a telephone call. It not only helps you, but also lets the patient know that you are genuinely interested in them as an individual, rather than just as a patient.

It is also very helpful to make a regular habit of discussing clinical decisions with fellow optometrists. When doing this, it is often more useful to focus on process and underlying thinking, rather than outcomes. What was done and why it was done, rather than simply what happened.

A general recommendation is to audit outcomes with patients and review processes with peers.

Pre-packaged solutions

Prepared thinking

The speed and apparent effortlessness of decision making by skilled clinicians is daunting.

An explanation for the seemingly too-quick-to-be-possible decision making can be found within a story recounted to me by a specialist periodontist. When a patient complained that the examination fee was extortionate when he had made a diagnosis in less than 30 seconds, he replied that it was not the length of the consultation that was being paid for, but rather the 30 years of mindful clinical work and study that enabled him to make the correct diagnosis so quickly.

The point is that he had done his preparation and clinical thinking beforehand. Similarly, good clinicians use pre-packaged solutions that make use of thinking undertaken before their patients arrive. This avoids having to go back to first principles every time and simplifies decisions to pattern matching and verifying the applicability of previously established solutions.

Most clinicians have internalised many such patterns of action; indeed, the number and nuanced complexity of these pre-packaged solutions is the hallmark of experience that has not been wasted. These packages of behaviour may cover the investigation of particular, or clusters of, symptoms or signs to formulate a diagnosis, or the management of a diagnosed condition.

Examples in my repertoire include what to do when a patient presents with symptoms of double vision, intolerance to new spectacles, or an optic nerve head suspicious for glaucoma. With repeated use, these split and become more specific, such as what to do when a patient presents with sudden-onset double vision or gradually increasing occurrences of diplopia, intolerance to new varifocals in someone who has not worn them previously or in an existing wearer of varifocal lenses, or a disc suspicious for glaucoma in someone with raised IOP or with low IOP.

These are not just repetitions of what was done previously, but iteratively improved versions based on mindful reflection of what worked, what did not work, and what could have been done better, so making use of hindsight that is notably cleverer than foresight.

Guidelines

Published guidelines, such as clinical management guidelines or the recommendations of NICE, are formalised versions of pre-packaged thinking done by other people. These are very helpful because it is always useful to consider the opinion of knowledgeable people, and these guidelines normally reflect the consensus view of a group of experts. However, while useful, treating these as rules to learn and dogmatically follow is unwise because they have inherent limitations and their recommendations have only rarely been validated with scientific rigour.

Firstly, there is a trade-off between applicability and specificity; guidelines must be written for groups of people and so cannot be tailored for any particular individual with their somewhat unique clinical presentation, situational considerations and personal values – no valid decision can disregard context.

Moreover, as when copying homework, reflexive adherence to guidelines restricts the depth of understanding that can only come about through active engagement with a problem and a creative exploration for its solution. When guidelines are used in place of personal thinking, there is a risk of dependence such that clinicians are unprepared for novel problems or atypical variations.

It is my personal opinion that guidelines are exceptionally useful as a summary of the consensus viewpoint, and sometimes of the evidence, and should dominate the behaviours of clinicians who have not had the opportunity to develop a deep understanding. For clinicians who have, the appropriate use of guidelines is to feed into their own more numerous and nuanced models, developed with ‘time in the game’, which are usually dominated by the specifics of the situation.

This is not too different from parents knowing that the determinants of their behaviour are more complex than the simple-to-articulate rules that they set out for their children. The rules are not wrong; rather, they are a first approximation of how to act and are incomplete.

As with everything in life, our experience and depth of understanding vary across domains. An expert in corneal disease cannot be assumed to be an expert in matters of children or dispensing. It follows that in areas with which a clinician is very familiar, it might be appropriate to view guidelines as a starting point to be developed, whereas in areas where they have had less experience it is better to treat guidelines as instructive.

Respectful consideration of guidelines is always necessary, but this should never be confused with, or used as, an abdication of personal responsibility. Nor should guidelines be used as an excuse to downplay the need for effortful reflection, which serves as the foundation for good clinical decision making and requires regular practice.

As an aside, while most guidelines are written as an advisory reference for clinicians, it cannot be ignored that their use for the purposes of disciplinary action by regulatory bodies and civil courts is in the ascendant. This reinforces the imperative for all clinicians not to ignore guidelines, but it remains the case that guidelines have not been accorded unchallengeable status in law and they have not usurped the role of an expert witness in court. Indeed, conversely, compliance with guidelines cannot be relied upon as a defence against liability if conduct is found to be negligent.

Clinicians are accountable for doing the right thing for their patients and not for their rigid adherence to guidelines.

Dilemmas of diagnosis

To diagnose is not merely to describe a presentation, but to match it to a pre-existing and culturally agreed framework of categories. Making a diagnosis is a judgement of which diagnostic label is the best match. We need to make diagnoses because they guide our management and act as a shorthand when we communicate with others.

All clinicians make diagnoses, but there is variation in their precision, accuracy, confidence and immutability. Precision refers to the narrowness or specificity of the ascribed diagnostic category. Accuracy reflects our track record; how often are we right and how often are we wrong. Confidence is our degree of certainty in being right. Immutability is the resistance to us changing our mind.

Every diagnosis is a working diagnosis

A diagnosis should never be considered final, as something chiselled in stone. This applies not only to our own diagnoses, but also to those of others, including more experienced optometrists and ophthalmologists.

Nobody is immune to being wrong. It may be that a previous decision was reasonable or that it was the most likely given the information available at the time. But as the facts change, we must be willing to change our minds. There is an analogous and charming term in software development, ‘perpetual beta’, which embraces a prototypic disposition where software is continually refined and a final version is never established.

The perils of precision

At its simplest level, a diagnosis is about judging whether or not there is something to worry about. This is not dissimilar to the NHS Sight Test requirement for the detection of abnormality; however, just because something is unusual does not mean there is anything to worry about, as with physiological anisocoria, for example.

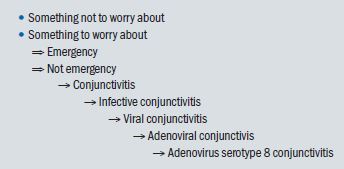

As the number of categories increases, and each category is deconstructed into multiple subcategories, our ‘diagnosis’ becomes more precise. A complete first expansion of our diagnostic categories is shown in figure 1, which also shows a selective serial expansion of the subcategory of ‘something to worry about’: conjunctivitis – infective conjunctivitis – viral conjunctivitis – adenoviral conjunctivitis – adenovirus serotype 8.

Figure 1: Increasing precision of diagnosis through successive expansion of categorisation

This successive expansion could continue, introducing more levels, and in doing so increasing diagnostic precision. However, it does not follow that the smaller diagnostic categories are increasingly useful. When the number of categories we are considering is far in excess of available management options, the point of having diagnoses to guide management and assist communication has been overshot. There is a sweet spot in the degree of precision that will vary with the context of specific situations.

Impatience for precision also has potential drawbacks in that it may prematurely close the mind to alternative diagnoses that were initially overlooked or considered unlikely. Our working memory can only handle about seven chunks of information and if we are so far down the viral conjunctivitis rabbit hole that we are obsessing about the adenoviral serotype, it is difficult to retreat and be open to the possibility that there may in fact be no viral infection, but rather that it is an atypical presentation of allergic eye disease.

A strategy to avoid the perils of over-eager precision in diagnosis is to consciously delay narrowing our focus and avoid becoming too specific too soon. In this regard, it is useful to make use of more general, abstract diagnostic categories, such as self-limiting vs treatment required, urgent referral vs routine referral, disease mechanism and anatomical location, at least until the problem space contains few enough options for each to be considered individually.

Reducing problem space

Problem space describes a useful mental representation of all possibilities as a physical space where each possibility occupies some volume. Investigations can be visualised as an attempt to shrink problem space by excluding possibilities. Implicit in this approach is the acceptance that it is easier to exclude options than to confirm them. A great many possibilities can be excluded with certainty by an answer to a single question or test result, whereas supporting information is always provisional.

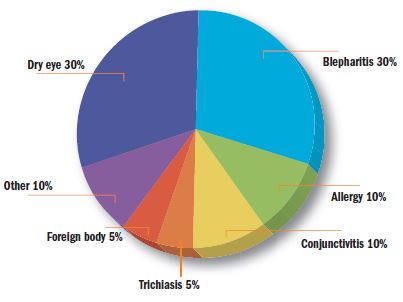

Figure 2: An estimation for the probabilities of possible causes of ocular surface discomfort

For example, trauma, contact lenses and medicamentosa cannot be the cause of an eye problem when there is no history of trauma, contact lens wear or eyedrop use, respectively. Knowing this allows us to exclude a large number of possible diagnoses. Also, accepting caveats and the odd exception, when only one eye is involved the cause of irritation is very unlikely to be allergy, dry eye or blepharitis, because these conditions almost always affect both eyes. In addition to whether one or both eyes are affected, knowing the duration is useful. Symptoms lasting more than a few days are not going to be due to microbial keratitis, because it gets worse very quickly, and symptoms that started acutely yesterday are not going to be caused by dry eye, which has an insidious onset.

An efficient investigation rapidly whittles problem space to a manageable size with an emphasis on excluding possibilities before moving on to confirmatory or discriminatory strategies.
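The exclusion-first idea can be sketched as a filter over a set of candidate causes, using only the exclusions described above: a cause that depends on a particular history item is dropped when that history is absent, and conditions that usually affect both eyes are dropped when only one eye is involved. The candidate list, the `Candidate` type and the `exclude` function are hypothetical and deliberately incomplete; this illustrates shrinking problem space and is not a diagnostic tool.

```python
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass(frozen=True)
class Candidate:
    name: str
    usually_bilateral: bool = False  # e.g. allergy, dry eye, blepharitis
    requires: Optional[str] = None   # history item this cause depends on, if any


# Hypothetical, deliberately incomplete problem space for an uncomfortable eye.
CANDIDATES = [
    Candidate("trauma", requires="trauma"),
    Candidate("contact lens related", requires="contact lenses"),
    Candidate("medicamentosa", requires="eyedrops"),
    Candidate("allergic eye disease", usually_bilateral=True),
    Candidate("dry eye", usually_bilateral=True),
    Candidate("blepharitis", usually_bilateral=True),
    Candidate("microbial keratitis"),
    Candidate("foreign body"),
]


def exclude(candidates: List[Candidate], history: Set[str], one_eye_only: bool) -> List[str]:
    """Return the names of candidates that survive exclusion by history and laterality."""
    remaining = []
    for c in candidates:
        if c.requires is not None and c.requires not in history:
            continue  # e.g. no eyedrops, so not medicamentosa
        if one_eye_only and c.usually_bilateral:
            continue  # monocular, so allergy, dry eye and blepharitis are very unlikely
        remaining.append(c.name)
    return remaining


print(exclude(CANDIDATES, history=set(), one_eye_only=True))
# ['microbial keratitis', 'foreign body']
```

Three cheap questions (trauma, contact lenses, eyedrops) plus laterality remove six of the eight hypothetical candidates before any confirmatory test is considered.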

Possibility vs probability

Symptoms or signs that can only be caused by one disease are said to be pathognomonic. Examples of eye disease with such characteristics include the appearance of a cherry red spot at the macula in the first few hours following a central retinal artery occlusion, or a dendritic ulcer with end bulbs in Herpes simplex epithelial keratitis.

However, these examples are the exception to the rule. Most symptoms and signs can result from a number of causes. A common example in practice is a customer experiencing tilted vision with new progressive lenses. This might be the consequence of an alteration to the astigmatic portion of their refraction, a change in the design of the progressive lens, incorrect lens centration or conversion of their dry AMD to wet AMD.

Clinicians are commonly trained to make a list of potential causes of the symptom or observed feature. Together, these possibilities make up the differential diagnosis. Most students resist thinking in this structured manner because it rubs against the common belief that our first thought is the best thought. Submitting to this practice reinforces the appreciation that different conditions overlap in how they present and guards against latching onto the first compatible option that comes to mind.

The next step is to recognise that not all causes are equally likely. There is a difference between possibilities and probabilities. The correct diagnosis is usually the most probable, and so it is useful to estimate the probability of each option. In most circumstances it is sufficient to do this informally, rather than with numbers. What is important is to grasp the value of extending an unordered list of differential diagnoses in this way.

An estimation of the probabilities of possible causes of ocular surface discomfort is shown in figure 2. The exactness of the numbers is not as important as appreciating that the probabilities in the pie chart have to add up to 100%. So if, during the course of investigation, more information is gathered that increases or reduces the size of any of these wedges, then all the others must alter in size too, either getting bigger or smaller. Knowing that somebody does not have a foreign body or an ingrowing eyelash increases the chance that they do have dry eye, whereas knowing that they are atopic increases the odds of allergic eye disease and so necessarily reduces the likelihood of dry eye.

This example illustrates the true power of exclusion in that it not only reduces the totality of problem space, but simultaneously increases the probability of the remaining options.
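A minimal sketch of this renormalisation, assuming the wedges are held as a dictionary of estimated probabilities. The percentages below are hypothetical placeholders, not the values in figure 2; the point is only that excluding options and dividing by the remaining total makes every surviving wedge larger.

```python
from typing import Dict, Iterable


def exclude_and_renormalise(probs: Dict[str, float], excluded: Iterable[str]) -> Dict[str, float]:
    """Remove excluded causes and rescale the rest so that they again sum to 1."""
    excluded_set = set(excluded)
    remaining = {cause: p for cause, p in probs.items() if cause not in excluded_set}
    total = sum(remaining.values())
    if total == 0:
        raise ValueError("every option has been excluded")
    return {cause: p / total for cause, p in remaining.items()}


# Hypothetical estimates (per cent), NOT the values in figure 2.
estimates = {
    "dry eye": 40,
    "blepharitis": 20,
    "allergic eye disease": 20,
    "foreign body": 15,
    "ingrowing eyelash": 5,
}

# No foreign body and no ingrowing eyelash found on examination.
print(exclude_and_renormalise(estimates, ["foreign body", "ingrowing eyelash"]))
# {'dry eye': 0.5, 'blepharitis': 0.25, 'allergic eye disease': 0.25}
```

With these assumed figures, excluding a fifth of the pie raises dry eye from 40% to 50% without any test having been directed at the tear film.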

Outside-to-Inside thinking

Clinicians do well to consider the base-rate probabilities of their patient demographic before shifting the focus to specific features of the individual patient. This outside-to-inside thinking is deliberate, and is done to mitigate the distorting effects of the anchoring and representativeness heuristics.

The anchoring heuristic refers to our subconscious tendency to cling to the first thing that comes to mind. We cannot avoid anchoring; we do not choose to do it, we just do it, even when we know about it, but we can employ strategies to choose better anchors.

Population-based anchors are more reliable than individual-based anchors because atypical presentations of common diseases are more common than typical presentations of rare diseases. For example, episcleritis that presents with features of scleritis is more common than scleritis, and headaches that occur on waking are more commonly related to stress than to a brain tumour.

The representativeness heuristic refers to our tendency to substitute how closely something resembles a prototype in our minds for how probable it is. In our clinics, it typically leads us to think that rare diagnoses are more common than they are, and so to overdiagnose these conditions.

Consider the following. A patient presents in community practice with a diffusely red eye and complains of pain that wakes them from sleep. This description fits the classic presentation of scleritis very closely, and with this knowledge most clinicians would consider scleritis the most probable diagnosis. However, scleritis is very rare in community practice and, in point of fact, this is more likely to be an atypical presentation of episcleritis. A rare diagnosis demands more confirmatory evidence than a common one.
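Bayes' rule makes the point about base rates concrete: each diagnosis's posterior weight is its base rate multiplied by how likely the presentation would be if that diagnosis were true, renormalised over all candidates. In the sketch below every number is hypothetical, chosen only to show that a presentation far more typical of scleritis can still point towards episcleritis when episcleritis is much more common. The function name is also illustrative.

```python
from typing import Dict


def posterior(base_rates: Dict[str, float], likelihoods: Dict[str, float]) -> Dict[str, float]:
    """Bayes' rule over competing diagnoses.

    base_rates:  relative frequency of each diagnosis in the population seen
    likelihoods: probability of this presentation given each diagnosis
    """
    unnormalised = {d: base_rates[d] * likelihoods[d] for d in base_rates}
    total = sum(unnormalised.values())
    return {d: w / total for d, w in unnormalised.items()}


# HYPOTHETICAL numbers for illustration only, not clinical data:
# episcleritis assumed 50 times as common as scleritis in community practice,
# and the 'classic' painful presentation assumed 16 times more typical of scleritis.
base_rates = {"episcleritis": 50, "scleritis": 1}
likelihoods = {"episcleritis": 0.05, "scleritis": 0.8}

post = posterior(base_rates, likelihoods)
print({d: round(p, 2) for d, p in post.items()})
# {'episcleritis': 0.76, 'scleritis': 0.24}
```

Starting from the base rates (outside) and only then weighting by the individual features (inside) is what stops the strong resemblance to scleritis from dominating.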

Information toxicity

Clinicians need to gather information so they can understand what is going on and upon which to base decisions. The accepted wisdom is that more data confers a deeper understanding and allows for better decision making, and so we need to ask more questions and do more tests.

This is true up to a point. Certainly, knowing something is better than knowing nothing, but what is not so commonly appreciated is that the usefulness of information declines as more is accrued and thereafter additional information can have negative effects. More is not always better.

We cannot think about too much at once. Too much data will overload our minds and then we are more likely to overlook a salient piece of information. Information has a cost, not just in the difficulty of its acquisition and effort in its processing, but in its potential to displace what we already know. As with a healthy diet, it follows that we should be selective in what we consume and not binge on everything to hand. Good information is both relevant and novel.

Clinicians should only gather information if it has a potential predictive value that might change their thinking or change their behaviour. We do not count eyelashes because it is pointless. More pertinently, it is inefficient to continue looking for confirmatory evidence when we are already confident about a diagnosis. Rather, in these circumstances, we should look for evidence that might disprove it.

As an example, when a person presents with symptoms highly suggestive of dry eye, a condition so common that base rates tell us it is very likely to be responsible, it is more efficient to focus the examination on excluding other conditions that can elicit similar grumblings than to conduct an extensive assessment of the tear film. Because these tests are highly variable and have a high false-positive rate, they will invariably find something amiss even in the absence of dry eye. And even if they do not, most clinicians are going to recommend lubricants anyhow.

Just as, when determining your location by triangulating bearings from three points on a hillside, the reference points need to be spatially distinct, so when trying to triangulate a diagnosis we want our pieces of information to be independent. Many of the pieces of information in our clinics are not completely independent, and they sometimes tell us the same thing packaged differently.

For example, when considering the possibility of glaucoma, we may look at the optic nerve head, use an HRT to quantify the neuroretinal rim, and assess the RNFL and ganglion cell complex with an OCT. All of these are useful, and they are somewhat different, but they are not completely independent; rather, they all describe related anatomy that, for diagnostic purposes, is either definitely normal, definitely abnormal, or suspect. To think that we cannot be wrong with extensive use of marvellous technology would be to succumb to the cultural malady of ‘techdazzlement’. In this case, it would be more efficient to pick one source of anatomical information and combine it with other independent sources of information, such as visual fields, tonometry and demographic risk factors.
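Why redundant tests inflate confidence can be sketched with likelihood ratios on the odds scale, where each finding multiplies the prior odds. That multiplication is only valid when the findings are conditionally independent. The prior odds and the likelihood ratio below are hypothetical, and the function names are illustrative; the sketch compares counting three related structural measurements as separate evidence with counting their shared structural signal once, which is itself a simplification of properly modelling the correlation.

```python
from typing import Iterable


def odds_to_probability(odds: float) -> float:
    return odds / (1.0 + odds)


def update_odds(prior_odds: float, likelihood_ratios: Iterable[float]) -> float:
    """Multiply prior odds by each likelihood ratio.

    Valid only if the findings are conditionally independent.
    """
    odds = prior_odds
    for lr in likelihood_ratios:
        odds *= lr
    return odds


# HYPOTHETICAL values for illustration only.
prior_odds = 0.1   # prior odds of glaucoma in this patient
lr_suspect = 3.0   # likelihood ratio of a 'suspect' structural finding

# Disc assessment, HRT and OCT all describe related anatomy. Treating their
# agreement as three independent findings overcounts the evidence:
naive = odds_to_probability(update_odds(prior_odds, [lr_suspect] * 3))
# Counting the shared structural signal once:
once = odds_to_probability(update_odds(prior_odds, [lr_suspect]))

print(round(naive, 2), round(once, 2))
# 0.73 0.23
```

Under these assumed numbers, treating three views of the same anatomy as independent more than triples the apparent probability, which is the misplaced confidence the text warns about.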

The main problem of toxic data is not simply their adverse effects on outcomes, but that they poison self-awareness of fallibility. Paradoxically, the toxic effects of too much information are accompanied by an excess of misplaced confidence. This is potentially dangerous because it stops clinicians making allowances for being wrong.

Strategies for diagnosis

This section aims to put the above into a practical form as a general strategy for diagnosis, using as an example someone who presents with an uncomfortable eye.

Examination

First, think about what it is most likely to be using the base-rates of your population demographic. Second, exclude the really bad possibilities by asking about red-flag symptoms (pain, photophobia, poor vision), and then exclude infective keratitis, uveitis and acute angle closure glaucoma by examining the cornea, anterior chamber and measuring IOP.

Next, filter out diseases by exclusion based on symptoms. One or both eyes? Monocular involvement is unlikely to be allergy, dry eye or blepharitis; binocular involvement is unlikely to be corneal infection or trauma. Duration? More than three months can (almost) exclude viral conjunctivitis; more than three weeks can (almost) exclude bacterial conjunctivitis; more than three days can (almost) exclude infective keratitis; and less than three weeks can (almost) exclude dry eye and blepharitis. Is there a history of trauma? Do they wear contact lenses? Do they use eyedrops?
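The duration rules of thumb above translate directly into thresholds. In this sketch, ‘three months’ is approximated as 90 days and ‘three weeks’ as 21 days; those conversions, and the function name, are assumptions for illustration. As in the text, the result lists causes that are (almost) excluded, not ruled out with certainty.

```python
from typing import Set

# Thresholds in days. Converting "three months" to 90 days is an assumption.
THREE_DAYS, THREE_WEEKS, THREE_MONTHS = 3, 21, 90


def largely_excluded_by_duration(duration_days: float) -> Set[str]:
    """Causes that the symptom duration (almost) rules out, per the rules of thumb."""
    out: Set[str] = set()
    if duration_days > THREE_MONTHS:
        out.add("viral conjunctivitis")
    if duration_days > THREE_WEEKS:
        out.add("bacterial conjunctivitis")
    if duration_days > THREE_DAYS:
        out.add("infective keratitis")  # gets worse very quickly
    if duration_days < THREE_WEEKS:
        out.update({"dry eye", "blepharitis"})  # insidious-onset conditions
    return out


print(sorted(largely_excluded_by_duration(1)))   # symptoms started yesterday
# ['blepharitis', 'dry eye']
print(sorted(largely_excluded_by_duration(30)))  # about a month
# ['bacterial conjunctivitis', 'infective keratitis']
```

A single question about duration therefore removes a different slice of problem space depending on the answer, which is what makes it such an efficient early filter.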

Then, filter by the major disease mechanisms: trauma, infection, allergy, autoimmunity, extremely raised IOP, desiccation, toxicity and neoplasia. Alternatively, or in combination, anatomical locations could be used to filter: adnexa, cornea, conjunctiva, anterior chamber, iris, crystalline lens, vitreous, retina, macula, optic nerve and brain.

Next, move on to pattern recognition, applying outside-to-inside thinking, and then to confirmatory and discriminatory strategies.

Review

The final step in diagnosis is the review, which is crucially important before enacting management. Clinicians should consider if every symptom and observation has been accounted for. The possibility of an atypical presentation of another condition or of more than one condition should be entertained. It is also important to think beyond the eyes being examined and ask why the condition has occurred. Sometimes in our clinics we are witness to ocular manifestations of hitherto undiagnosed systemic disease that might also need to be investigated or managed.

It is a useful exercise for clinicians to accept humbly that they may be wrong and to make this tangible by mentally estimating the probability that they are. It is never zero. Doing so often encourages making an allowance for being wrong in the management plan, perhaps with regard to scheduling a follow-up, or possibly by managing the case as a serious disease that is not considered the most probable but cannot be satisfactorily excluded.

Dr Michael Johnson is a therapeutic optometrist working in independent practice in Gloucestershire.